While trying to run some kubectl commands I faced this problem:

    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
    error: exec plugin: invalid apiVersion "client.authentication.k8s.io/v1alpha1"

To work toward a solution, let's start by checking which version I am running:

    ╰─○ kubectl version --client --output=yaml
    clientVersion:
      buildDate: "2022-05-03T13:36:49Z"
      compiler: gc
      gitCommit: 4ce5a8954017644c5420bae81d72b09b735c21f0
      gitTreeState: clean
      gitVersion: v1.24.0
      goVersion: go1.18.1
      major: "1"
      minor: "24"
      platform: darwin/amd64
    kustomizeVersion: v4.5.4

As the error hints, let's see what cluster-info gives us:

    ╰─○ kubectl cluster-info
    Kubernetes control plane is running at https://DDC6A7373DC86D737EE035F49DBDF939.gr7.us-east-1.eks.amazonaws.com
    CoreDNS is running at https://DDC6A7373DC86D737EE035F49DBDF939.gr7.us-east-1.eks.amazonaws.com/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
    KubeDNSUpstream is running at https://DDC6A7373DC86D737EE035F49DBDF939.gr7.us-east-1.eks.amazonaws.com/api/v1/namespaces/kube-system/services/kube-dns-upstream:dns/proxy

The file used to configure access to a cluster is sometimes called a kubeconfig file. This is a generic way of referring to configuration files. It does not mean that there is a file named kubeconfig.

It’s possible to have multiple kubeconfig files, each specifying clusters, contexts, namespaces, and users, and to switch between them to use the desired configuration:

    kubectl config --kubeconfig=<myfile> view

By default, kubectl reads the file named config in the $HOME/.kube directory. Setting the $KUBECONFIG environment variable overrides that location.
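To make the lookup order concrete, here is a minimal sketch of a shell helper that prints the kubeconfig files kubectl would consult. The function name `kubeconfig_files` is hypothetical, and the sketch assumes Linux or macOS, where entries in $KUBECONFIG are separated by colons. It does not account for an explicit `--kubeconfig` flag, which takes precedence over both.

```shell
# Hypothetical helper: list the kubeconfig files kubectl would consult,
# one per line.
# Assumption: Linux/macOS, where KUBECONFIG entries are separated by ':'.
kubeconfig_files() {
  if [ -n "${KUBECONFIG:-}" ]; then
    # Split the colon-separated list and drop empty entries.
    printf '%s\n' "$KUBECONFIG" | tr ':' '\n' | sed '/^$/d'
  else
    # Fall back to the default location.
    printf '%s\n' "$HOME/.kube/config"
  fi
}

kubeconfig_files
```

If the list contains more than one file, kubectl merges them, so the broken entry may live in any of them.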

To check which Kubernetes configuration is in use, I run:

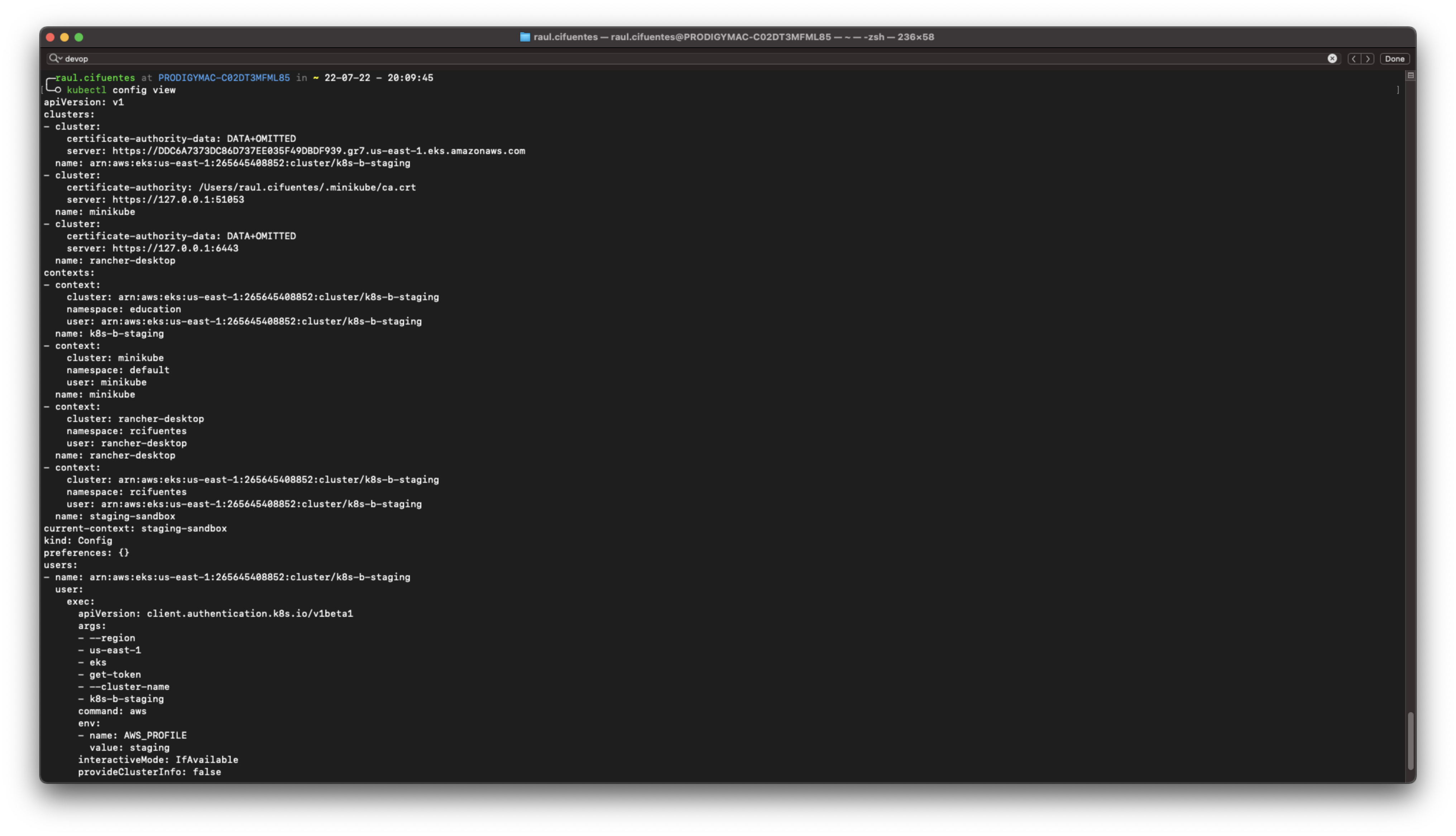

    kubectl config view

In my case the output includes:

    current-context: staging-sandbox

Note that the current context is staging-sandbox. So far so good.

Here is my output of config get-contexts:

    ╰─○ kubectl config get-contexts
    CURRENT   NAME              CLUSTER                                                    AUTHINFO                                                   NAMESPACE
              k8s-b-staging     arn:aws:eks:us-east-1:265645408852:cluster/k8s-b-staging   arn:aws:eks:us-east-1:265645408852:cluster/k8s-b-staging   education
              minikube          minikube                                                   minikube                                                   default
              rancher-desktop   rancher-desktop                                            rancher-desktop                                            rcifuentes
    *         staging-sandbox   arn:aws:eks:us-east-1:265645408852:cluster/k8s-b-staging   arn:aws:eks:us-east-1:265645408852:cluster/k8s-b-staging   rcifuentes

After a little research I found that editing the ~/.kube/config file and replacing v1alpha1 with v1beta1 in the user's exec apiVersion solves the issue. kubectl v1.24 no longer accepts the v1alpha1 exec credential API, which is why the client version matters here.

Before

    - name: arn:aws:eks:us-east-1:265645408852:cluster/k8s-b-staging
      user:
        exec:
          apiVersion: client.authentication.k8s.io/v1alpha1

After

    - name: arn:aws:eks:us-east-1:265645408852:cluster/k8s-b-staging
      user:
        exec:
          apiVersion: client.authentication.k8s.io/v1beta1
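If you would rather not edit the file by hand, the same replacement can be sketched as a small shell function. The name `patch_exec_api_version` is hypothetical and not part of kubectl. The sketch keeps a backup copy next to the original, and it assumes that the credential plugin named in the exec section can itself respond with v1beta1, which this post does not verify.

```shell
# Hypothetical helper: switch every exec apiVersion in a kubeconfig file
# from v1alpha1 to v1beta1, keeping a backup as <file>.bak.
patch_exec_api_version() {
  file="$1"
  # Keep the original so the change can be reverted.
  cp "$file" "$file.bak" || return 1
  # Read from the backup and rewrite the original; this avoids 'sed -i',
  # whose syntax differs between GNU and BSD/macOS sed.
  sed 's|client\.authentication\.k8s\.io/v1alpha1|client.authentication.k8s.io/v1beta1|g' \
    "$file.bak" > "$file"
}

# Example usage (uncomment to apply it to the default kubeconfig):
# patch_exec_api_version "$HOME/.kube/config"
```

Running `kubectl cluster-info` again afterwards is a quick way to confirm that the error is gone. To undo the change, copy the `.bak` file back over the original.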